Alohomora

Table of Contents:

- 1. Deadline

- 2. Phase 1: IMU Attitude Estimation

- 3. Phase 2: Waypoint Navigation and AprilTag Landing

- 4. Submission Guidelines

- 5. Allowed and Disallowed functions

- 6. Collaboration Policy

- 7. Acknowledgements

1. Deadline

11:59:59 PM, August 24, 2023.

2. Phase 1: IMU Attitude Estimation

2.1. Problem Statement

In this phase, you will implement three methods to estimate three-dimensional orientation (attitude). You are given data from an ArduIMU+ V2, a six-degree-of-freedom Inertial Measurement Unit (6-DoF IMU), i.e., readings from a 3-axis gyroscope and a 3-axis accelerometer. You will estimate the underlying 3D orientation and compare it with the ground truth given by a Vicon motion capture system.

2.2. Reading the Data

The Phase1\Data folder contains the training data in two subfolders: Phase1\Data\Train\IMU holds the raw IMU data and Phase1\Data\Train\Vicon holds the Vicon data. Files in the two folders are numbered for correspondence, i.e., Phase1\Data\Train\IMU\imuRaw1.mat corresponds to Phase1\Data\Train\Vicon\viconRot1.mat. Download the project 0 package from here; it includes the data in the Phase1 folder. The data files are in .mat format. To read them in Python, use the snippet below:

```python
>>> from scipy import io
>>> x = io.loadmat("filename.mat")
```

This returns the data as a dictionary. Disregard the keys __header__, __version__, and __globals__ and their values. The keys vals and ts hold the data you need: ts contains the timestamps and vals the IMU readings. vals has one column per sample, and its rows are ordered as \(\begin{bmatrix} a_x & a_y & a_z & \omega_z & \omega_x & \omega_y\end{bmatrix}^T\) (note that the gyroscope values come in the order \(z, x, y\)). These values are not in physical units and must be converted.
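A minimal sketch of unpacking the loaded dictionary with NumPy, assuming vals is 6×N and ts is 1×N as described above. The helper name split_imu is hypothetical; it also reorders the gyroscope rows from \(\omega_z, \omega_x, \omega_y\) to \(\omega_x, \omega_y, \omega_z\) so that later code can index the axes in the natural order.

```python
import numpy as np


def split_imu(mat):
    """Split a dict returned by scipy.io.loadmat into timestamps, accel and gyro.

    Assumes mat["vals"] is 6xN with rows [ax, ay, az, wz, wx, wy] and
    mat["ts"] is 1xN, as described in the handout.
    """
    vals = np.asarray(mat["vals"], dtype=float)
    ts = np.asarray(mat["ts"], dtype=float).ravel()  # flatten 1xN -> (N,)
    accel_raw = vals[0:3, :]                         # rows ax, ay, az
    # Gyro rows are stored as wz, wx, wy; reorder them to wx, wy, wz.
    gyro_raw = vals[[4, 5, 3], :]
    return ts, accel_raw, gyro_raw


# Usage (the path is illustrative):
# from scipy import io
# ts, accel_raw, gyro_raw = split_imu(io.loadmat("Phase1/Data/Train/IMU/imuRaw1.mat"))
```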

To convert the acceleration values to \(\mathrm{m\,s^{-2}}\), use

\[\tilde{a_x} = \frac{a_x + b_{a,x}}{s_x}\]

and apply the same conversion to \(a_y\) and \(a_z\). Here \(\tilde{a_x}\) is the value of \(a_x\) in physical units, \(b_{a,x}\) is the accelerometer bias, and \(s_x\) is the accelerometer scale factor.

The accelerometer scale and bias parameters are stored in Data\IMUParams.mat. IMUParams is a \(2 \times 3\) matrix: the first row holds the scale factors \(\begin{bmatrix} s_x & s_y & s_z \end{bmatrix}\) and the second row holds the biases \(\begin{bmatrix} b_{a, x} & b_{a, y} & b_{a, z} \end{bmatrix}\) (computed as the average bias over all sequences using Vicon).
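A minimal NumPy sketch of the accelerometer conversion above, assuming accel_raw is the 3×N block of raw accelerometer rows (\(a_x, a_y, a_z\)) and imu_params is the \(2 \times 3\) IMUParams matrix. The function name is hypothetical, and so is the dictionary key used in the usage comment; inspect the loaded dictionary to find the actual key.

```python
import numpy as np


def accel_to_mps2(accel_raw, imu_params):
    """Convert raw accelerometer counts (3xN) to m/s^2.

    Applies a_tilde = (a + b_a) / s per axis. imu_params is the 2x3
    IMUParams matrix: row 0 holds the scales [s_x, s_y, s_z] and row 1 the
    biases [b_ax, b_ay, b_az].
    """
    imu_params = np.asarray(imu_params, dtype=float)
    scale = imu_params[0].reshape(3, 1)  # column vectors broadcast over N samples
    bias = imu_params[1].reshape(3, 1)
    return (np.asarray(accel_raw, dtype=float) + bias) / scale


# Usage (the key "IMUParams" is an assumption):
# from scipy import io
# params = io.loadmat("Phase1/Data/IMUParams.mat")["IMUParams"]
# accel = accel_to_mps2(accel_raw, params)
```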

To convert \(\omega\) to \(\mathrm{rad\,s^{-1}}\), use

\[\tilde{\omega} = \frac{3300}{1023} \times \frac{\pi}{180} \times 0.3 \times \left(\omega - b_{g}\right)\]

Here, \(\tilde{\omega}\) is the value of \(\omega\) in physical units and \(b_g\) is the gyroscope bias, computed as the average of the first few hundred samples (assuming that the IMU is at rest at the beginning).
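A matching sketch for the gyroscope conversion, assuming gyro_raw is 3×N with rows already reordered to (\(\omega_x, \omega_y, \omega_z\)). The number of samples used for the bias (n_bias=200) is an illustrative choice, not a value given in this handout.

```python
import numpy as np

# Constant from the handout: (3300 / 1023) * (pi / 180) * 0.3
GYRO_SCALE = 3300.0 / 1023.0 * np.pi / 180.0 * 0.3


def gyro_to_radps(gyro_raw, n_bias=200):
    """Convert raw gyro counts (3xN, rows wx, wy, wz) to rad/s.

    The bias b_g is estimated per axis as the mean of the first n_bias
    samples, assuming the IMU is at rest at the start of the sequence.
    """
    gyro_raw = np.asarray(gyro_raw, dtype=float)
    b_g = gyro_raw[:, :n_bias].mean(axis=1, keepdims=True)  # shape (3, 1)
    return GYRO_SCALE * (gyro_raw - b_g)
```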

From the Vicon data, you need two keys: ts and rots. As before, ts holds the timestamps (size \(1 \times N\)); rots is a \(3 \times 3 \times N\) array of rotation matrices (Z-Y-X Euler angle convention) estimated by Vicon.
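To compare your estimates with Vicon, it is often convenient to convert rots to roll, pitch, and yaw. A minimal sketch with SciPy's Rotation class, assuming each rots[:, :, k] is a proper rotation matrix composed as \(R = R_z(\psi) R_y(\theta) R_x(\phi)\) (Z-Y-X); the function name vicon_to_euler is hypothetical.

```python
import numpy as np
from scipy.spatial.transform import Rotation


def vicon_to_euler(rots):
    """Convert a 3x3xN stack of Vicon rotation matrices to Z-Y-X Euler angles.

    Returns an (N, 3) array of [roll, pitch, yaw] in radians.
    """
    # SciPy expects the stack index first: (N, 3, 3).
    mats = np.moveaxis(np.asarray(rots, dtype=float), -1, 0)
    # Uppercase "ZYX" = intrinsic rotations; angles come back as [yaw, pitch, roll].
    yaw_pitch_roll = Rotation.from_matrix(mats).as_euler("ZYX")
    return yaw_pitch_roll[:, ::-1]
```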

An image of the rig used for data collection is shown below:

2.3. Sensor Calibration

Note that the IMU coordinate frame and the Vicon global coordinate frame might not be aligned at the start; you might have to register (align) them.

The Vicon and IMU data are NOT hardware-synchronized, although the timestamps ts of each stream are correct. Use ts as the reference when plotting the orientation from Vicon and IMU. You can also synchronize the streams in software using the timestamps, either by pairing each IMU sample with the closest Vicon timestamp or by interpolating the Vicon orientations with Slerp. You may use any third-party code for aligning timestamps.
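A minimal Slerp-based sketch using SciPy's scipy.spatial.transform.Slerp, assuming rots is the \(3 \times 3 \times N\) Vicon array, the Vicon timestamps are strictly increasing, and both streams share the same clock. The function name vicon_at_imu_times is hypothetical. It resamples Vicon onto the IMU timestamps so the two can be compared sample by sample; nearest-timestamp matching would work too, at the cost of some timing error.

```python
import numpy as np
from scipy.spatial.transform import Rotation, Slerp


def vicon_at_imu_times(vicon_ts, rots, imu_ts):
    """Interpolate Vicon orientations (3x3xN) at IMU timestamps with Slerp.

    IMU timestamps outside the Vicon time range are clipped to the nearest
    Vicon endpoint, because Slerp cannot extrapolate.
    Returns an (M, 3, 3) array of rotation matrices.
    """
    vicon_ts = np.asarray(vicon_ts, dtype=float).ravel()
    imu_ts = np.asarray(imu_ts, dtype=float).ravel()
    key_rots = Rotation.from_matrix(np.moveaxis(np.asarray(rots, dtype=float), -1, 0))
    slerp = Slerp(vicon_ts, key_rots)
    query = np.clip(imu_ts, vicon_ts[0], vicon_ts[-1])
    return slerp(query).as_matrix()
```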

2.4. Implementation

- You will write a function that computes orientation based only on gyroscope data (by integration, assuming that the initial orientation is known from Vicon). Check that it works well: plot the results, verify them, and add the plot to your report. For reference, watch the video here and read the tutorial here. Feel free to explore other resources to learn these concepts. Usage of ChatGPT is also encouraged as long as you do not blatantly plagiarize.

- You will write another function that computes orientation based only on accelerometer data (assuming that the IMU is only rotating). Verify that this function works well before you try to combine both estimates into a single filter, and add the plot to your report. For reference, watch the video here and read the tutorial here. Feel free to explore other resources to learn these concepts. Usage of ChatGPT is also encouraged as long as you do not blatantly plagiarize.

- You will write a third function that uses a simple complementary filter to fuse the estimates from the gyroscope and the accelerometer. Make sure this works well before you implement the next step. You should observe the estimates to be roughly an average of the previous two estimates. Report the value of the fusion factor \(\alpha\) and add the plot to your report. For reference, watch the video here and read the tutorial here. Feel free to explore other resources to learn these concepts. Usage of ChatGPT is also encouraged as long as you do not blatantly plagiarize.
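For reference, the three estimators can be written with the standard relations below. This is a sketch under stated assumptions, not the required formulation: Z-Y-X Euler angles (roll \(\phi\), pitch \(\theta\), yaw \(\psi\)), calibrated body rates \(\tilde{\omega} = \begin{bmatrix} \omega_x & \omega_y & \omega_z \end{bmatrix}^T\), calibrated accelerations \(\tilde{a_x}, \tilde{a_y}, \tilde{a_z}\), and sample interval \(\Delta t_k = t_k - t_{k-1}\) taken from ts. Signs depend on how the IMU axes are aligned with Vicon.

Gyro only (Euler-angle kinematics integrated with a forward-Euler step, rates evaluated at sample \(k-1\)):

\[\begin{aligned}
\dot{\phi} &= \omega_x + \left(\omega_y \sin\phi + \omega_z \cos\phi\right)\tan\theta \\
\dot{\theta} &= \omega_y \cos\phi - \omega_z \sin\phi \\
\dot{\psi} &= \frac{\omega_y \sin\phi + \omega_z \cos\phi}{\cos\theta} \\
\begin{bmatrix} \phi \\ \theta \\ \psi \end{bmatrix}_k &= \begin{bmatrix} \phi \\ \theta \\ \psi \end{bmatrix}_{k-1} + \begin{bmatrix} \dot{\phi} \\ \dot{\theta} \\ \dot{\psi} \end{bmatrix}_{k-1} \Delta t_k
\end{aligned}\]

Accelerometer only (the IMU is only rotating, so it measures gravity; yaw is not observable from gravity):

\[\phi^{a} = \operatorname{atan2}\left(\tilde{a_y}, \tilde{a_z}\right), \qquad \theta^{a} = \operatorname{atan2}\left(-\tilde{a_x}, \sqrt{\tilde{a_y}^2 + \tilde{a_z}^2}\right)\]

Complementary filter (one common convention, applied to roll and pitch, with yaw taken from the gyro alone; here \(\alpha\) weights the gyro prediction, and some references swap \(\alpha\) and \(1-\alpha\)):

\[\hat{\phi}_k = \alpha\left(\hat{\phi}_{k-1} + \dot{\phi}_{k-1}\,\Delta t_k\right) + (1-\alpha)\,\phi^{a}_k\]

and analogously for \(\theta\). A value of \(\alpha\) close to 1 trusts the gyro over short horizons while the accelerometer slowly corrects drift.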

The starter code also includes rotplot.py. Use it to visualize the orientation of your output; it takes a \(3 \times 3\) rotation matrix as input.
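Since rotplot.py expects a rotation matrix, Euler-angle estimates need converting first. A minimal sketch with SciPy, assuming the same Z-Y-X convention as the Vicon data; euler_zyx_to_matrix is a hypothetical helper, and the exact call signature of the provided plotting function should be checked in rotplot.py itself.

```python
import numpy as np
from scipy.spatial.transform import Rotation


def euler_zyx_to_matrix(roll, pitch, yaw):
    """Build the 3x3 rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    # Uppercase "ZYX" = intrinsic rotations, with angles given as [yaw, pitch, roll].
    return Rotation.from_euler("ZYX", [yaw, pitch, roll]).as_matrix()


# Usage: R = euler_zyx_to_matrix(roll_k, pitch_k, yaw_k), then pass R to rotplot.
```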

3. Phase 2: Waypoint Navigation and AprilTag Landing

3.1. Problem Statement

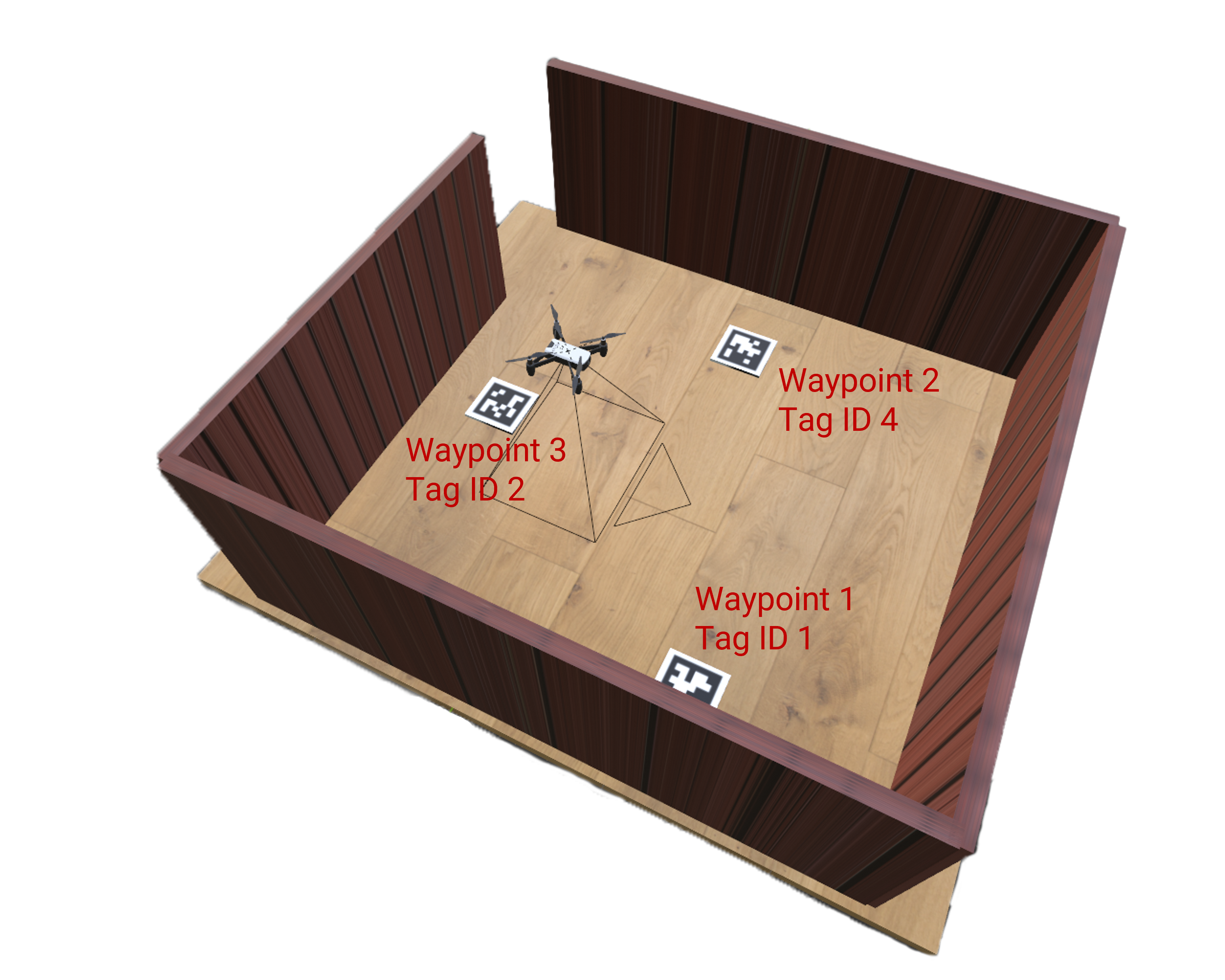

In this phase, you will design and implement a navigation and landing system for a simulated quadrotor. The quadrotor can fly to a given 3D position waypoint. You are required to autonomously navigate through a sequence of predefined waypoints, scan for an AprilTag after reaching each waypoint, and land on the AprilTag with ID 4. Note that you still have to navigate through all waypoints to complete the mission. For example, suppose there are 3 waypoints whose tag IDs (unknown beforehand) are 1, 4, and 2 (see Fig. 2). You navigate to waypoint 1, continue to waypoint 2, land at waypoint 2, take off again, and continue to waypoint 3 to complete the mission.
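To make the example concrete: if the tag IDs were known in advance, the mission would reduce to the target list produced by the hypothetical helper below, using the landing height of 0.1 m and takeoff height of 1.5 m given in Sec. 3.3. In the actual task the IDs are only discovered on arrival at each waypoint, so the sequence has to be built online by the state machine.

```python
def expand_mission(waypoints, tag_ids, land_z=0.1, takeoff_z=1.5, land_id=4):
    """Return the ordered 3D targets for a mission whose tag IDs are known.

    Every waypoint is visited. At a waypoint whose tag ID equals land_id, a
    landing target (same x, y, z=land_z) and a takeoff target (same x, y,
    z=takeoff_z) are inserted before moving on.
    """
    targets = []
    for (x, y, z), tag in zip(waypoints, tag_ids):
        targets.append((x, y, z))
        if tag == land_id:
            targets.append((x, y, land_z))
            targets.append((x, y, takeoff_z))
    return targets


# expand_mission([(0, 0, 1.5), (2, 0, 1.5), (2, 2, 1.5)], [1, 4, 2])
# -> [(0, 0, 1.5), (2, 0, 1.5), (2, 0, 0.1), (2, 0, 1.5), (2, 2, 1.5)]
```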

3.2. Codebase

To set up the codebase, install Blender 3.6 from the official website, then install the following Python libraries: imath, numpy, opencv-python, scipy, and pyquaternion. The codebase and the .blend file are included in the Phase2 folder of the project 0 package (see Sec. 2.2).

To learn how to run the code, watch this video from your awesome TA Manoj (also embedded below). If you are new to Blender, you can also check out this awesome Blender tutorial by Ramana, which was made for the Computer Vision class.

3.3. Implementation

You need to implement the following:

- A state machine to monitor and supply the next waypoint. You are given template starter code in Phase2\src\usercode.py with the template class state_machine. Fill in the step() function of this class: monitor whether you have reached the current desired waypoint (check the Euclidean distance between the current position, given as currpos in your step() function, and the desired waypoint) and switch to landing or to the next waypoint based on the AprilTag ID. To run the code, run Phase2\src\main.py, NOT Phase2\src\usercode.py.

- AprilTag detection to decide the next task. Your quadrotor is equipped with a downward-facing camera, and you are provided with a function fetchLatestImage() that returns the current camera frame. You can use the apriltag library to detect AprilTags; note that the tags are from the 36h11 tag family. Once you reach a waypoint, fetch the latest camera frame and detect the AprilTag ID; if it is 4, invoke a land command. To land, generate a waypoint with the same XY location and a Z location of 0.1 m. Once you have landed, take off again, i.e., keep the same XY location and set the Z location to 1.5 m. Then continue to the next waypoint, if any. If there are no more waypoints left, terminate your code and dance (in real life)! Note that you do not need images to test your waypoint-switching logic, but you do need them to know when to land. You can therefore test your state-switching code without landing and without rendering images, which makes debugging much faster.
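A minimal, simulator-independent sketch of the switching logic described above. It is NOT the template's state_machine class: the class name, the constructor arguments, the arrival tolerance tol (0.1 m here), and the detect_tag callback are all assumptions. In the simulator, detect_tag could wrap fetchLatestImage() and an apriltag detector configured for the 36h11 family; passing a stub instead lets you test waypoint switching without rendering images.

```python
import numpy as np


class WaypointStateMachine:
    """Hypothetical waypoint-switching sketch (not the template's state_machine).

    Each queue entry is (target_position, scan_for_tag). Mission waypoints are
    scanned for a tag on arrival; inserted landing/takeoff targets are not.
    """

    def __init__(self, waypoints, detect_tag, tol=0.1,
                 land_z=0.1, takeoff_z=1.5, land_id=4):
        self.queue = [(np.asarray(w, dtype=float), True) for w in waypoints]
        self.detect_tag = detect_tag  # callable returning the visible tag ID or None
        self.tol = tol                # arrival threshold in metres (assumed value)
        self.land_z = land_z
        self.takeoff_z = takeoff_z
        self.land_id = land_id
        self.done = False

    def step(self, currpos):
        """Return the target to fly to, or None once the mission is complete."""
        if not self.queue:
            self.done = True
            return None
        target, scan = self.queue[0]
        # Keep flying toward the current target until within tol (Euclidean distance).
        if np.linalg.norm(np.asarray(currpos, dtype=float) - target) > self.tol:
            return target
        self.queue.pop(0)
        if scan and self.detect_tag() == self.land_id:
            x, y = target[0], target[1]
            # Land at the same XY, then take off again before the next waypoint.
            self.queue[0:0] = [(np.array([x, y, self.land_z]), False),
                               (np.array([x, y, self.takeoff_z]), False)]
        if not self.queue:
            self.done = True
            return None
        return self.queue[0][0]
```

Calling step() once per control cycle with the current position returns either the same target (still en route) or the next one, so the caller never needs to know whether a landing was inserted.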

4. Submission Guidelines

If your submission does not comply with the following guidelines, you’ll be given ZERO credit.

4.1. File tree and naming

Your submission on ELMS/Canvas must be a zip file following the naming convention YourDirectoryID_p0.zip. If your email ID is abc@wpi.edu, then your DirectoryID is abc; in this example, the submission file should be named abc_p0.zip. The file must have the directory structure shown below. The file to run for Phase 1 of the project should be Phase1/Code/Wrapper.py, and for Phase 2 it should be Phase2/src/main.py, as shown in the file tree below. You can put helper functions in subfolders as you wish; reference them using relative paths, and if your Wrapper code takes command-line arguments, make sure they have default values. Provide detailed instructions on how to run your code in the README.md file.

NOTE: Please DO NOT include data in your submission. Furthermore, your submission file should NOT exceed 100 MB.

The file tree of your submission SHOULD resemble this:

YourDirectoryID_p0.zip
├── Phase1
|   └── Code
|       ├── Wrapper.py
|       ├── Any subfolders you want along with files
|       └── Report.pdf
|
├── Phase2
|   ├── log
|   ├── src
|   |   ├── usercode.py
|   |   ├── main.py
|   |   └── Other supporting files
|   ├── models
|   ├── outputs
|   ├── indoor.blend
|   ├── main.blend
|   └── Video.mp4
└── README.md

4.2. Report for Phase 1

For each section of the project, explain briefly what you did, and describe any interesting problems you encountered and/or solutions you implemented. You must include the following details in your writeup:

- Your report MUST be typeset in LaTeX using the IEEEtran template provided in the Draft folder and should be of conference-paper quality. Feel free to use an online editor such as Overleaf or to install LaTeX on your local machine.

- Links to the rotplot videos comparing attitude estimation using gyro integration, accelerometer estimation, the complementary filter, and Vicon. A sample video can be seen here.

- Plots for all the train and test sets. Each plot should show the angles estimated from the gyro only, the accelerometer only, the complementary filter, and Vicon, with a proper legend.

- A sample report for a similar project is given in the .zip file provided to you, under the name SampleReport.pdf. Treat this report as the benchmark, or gold standard, against which we will compare your report for grading.

4.3. Video for Phase 2

The video should be a screen capture of your code in action from both an oblique and top view as shown in the video below. Note that a screen capture is not a video recorded from your phone.

5. Allowed and Disallowed functions

Allowed:

- Any functions for reading, writing, and displaying/plotting images in cv2 and matplotlib

- Basic math utilities, including convolution operations, in numpy and math

- Any functions for pretty plots

- Quaternion libraries

- Any library that performs transformations between various representations of attitude

- Any code for alignment of timestamps

- Usage of ChatGPT (or any other LLM), as long as you include the prompts used in your report and do not blatantly plagiarize from it (this includes copy-pasting entire code)

Disallowed:

- Any function that implements, in part or in full, gyroscope integration, accelerometer attitude estimation, or the complementary filter, including using LLMs such as ChatGPT to write code for these

If you have any doubts regarding allowed and disallowed functions, please drop a public post on Piazza.

6. Collaboration Policy

NOTE: You are STRONGLY encouraged to discuss the ideas with your peers. Treat the class as a big group/family and enjoy the learning experience.

However, the code should be your own, and should be the result of you exercising your own understanding of it. If you reference anyone else’s code in writing your project, you must properly cite it in your code (in comments) and your writeup. For the full honor code refer to the RBE595-F02-ST Fall 2023 website.

7. Acknowledgements

The data for this fun project was obtained by the ESE 650: Learning In Robotics course at the University of Pennsylvania.